Mecanum Wheel Based Autonomous Navigation Mobile Robot

Overview: This project presents the design, development, and testing of a mobile robot powered by mecanum wheels for omnidirectional navigation. The system integrates precision mechanical components with advanced sensor fusion and control algorithms to achieve accurate, holonomic motion in complex environments.

Abstract

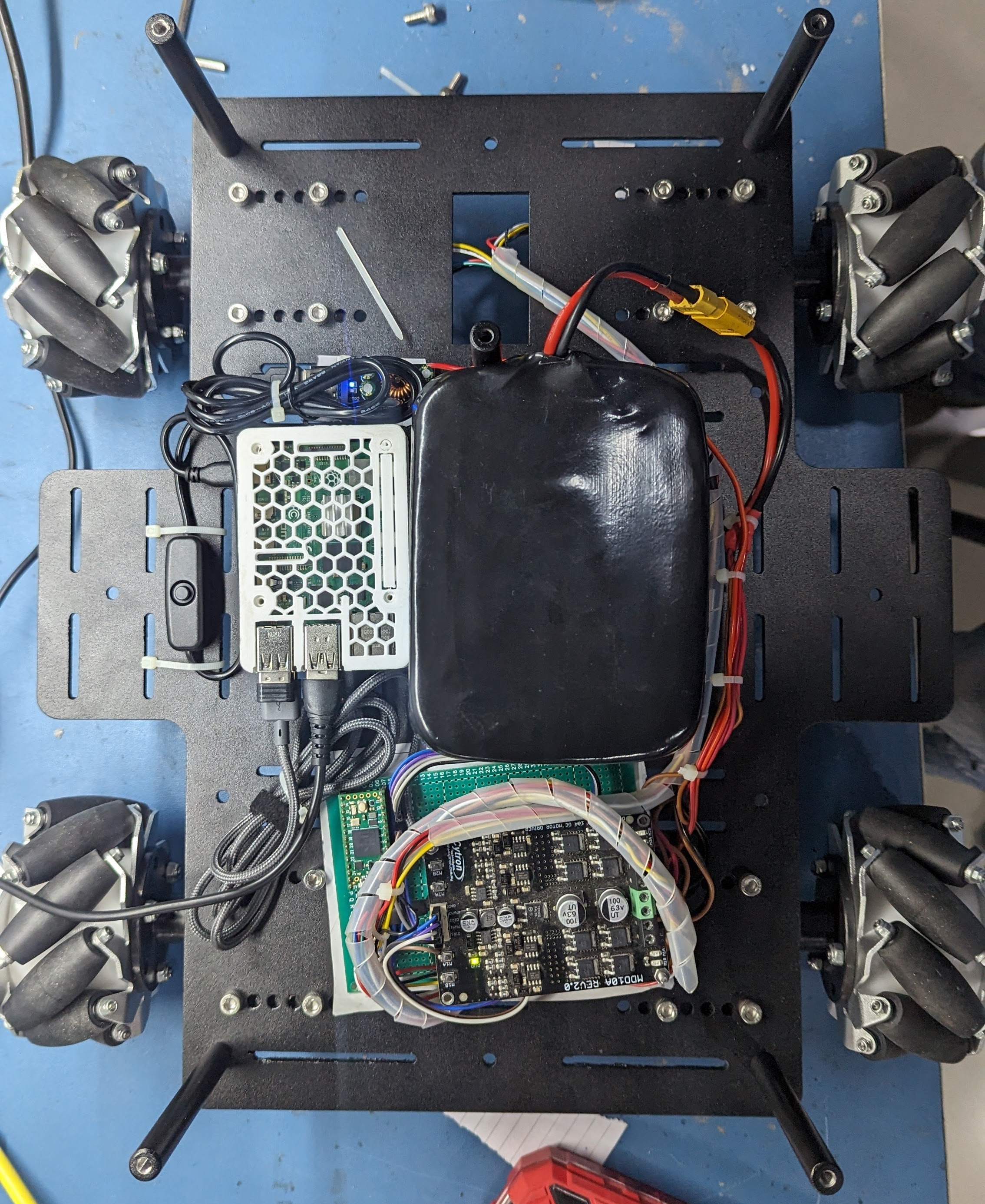

This project covers the design and implementation of a mecanum-wheel-based autonomous robot. The chassis is built from precision laser-cut metal parts, powder coated in black for durability and appearance. The drive system uses 60 rpm Rhino motors rated at 40 kg-cm of torque and fitted with high-resolution encoders, which allows precise omnidirectional movement. A Raspberry Pi 4 serves as the central processing unit, running ROS on top of Raspberry Pi OS. An RPlidar A1M8 sensor provides 360° environmental scanning, while custom firmware on a Teensy-based PCB converts ROS velocity commands into PWM signals under PID control. Lidar data and wheel encoder feedback are fused to improve localization and odometry, which supports mapping with GMapping and holonomic navigation with a TEB planner.

Introduction

Autonomous navigation for mobile robots demands precise control, robust sensor integration, and efficient mapping algorithms. In this project, a mecanum wheel configuration is used to achieve full omnidirectional movement, which is critical for navigating cluttered and dynamic environments. The mecanum drive removes the non-holonomic constraints of a conventional wheeled base, and a sensor suite consisting of an RPlidar for environmental scanning and wheel encoders for motion feedback, together with a Raspberry Pi 4 running ROS, helps compensate for wheel slippage. This report details the entire development process, from mechanical assembly to firmware implementation and experimental validation.

Hardware Setup

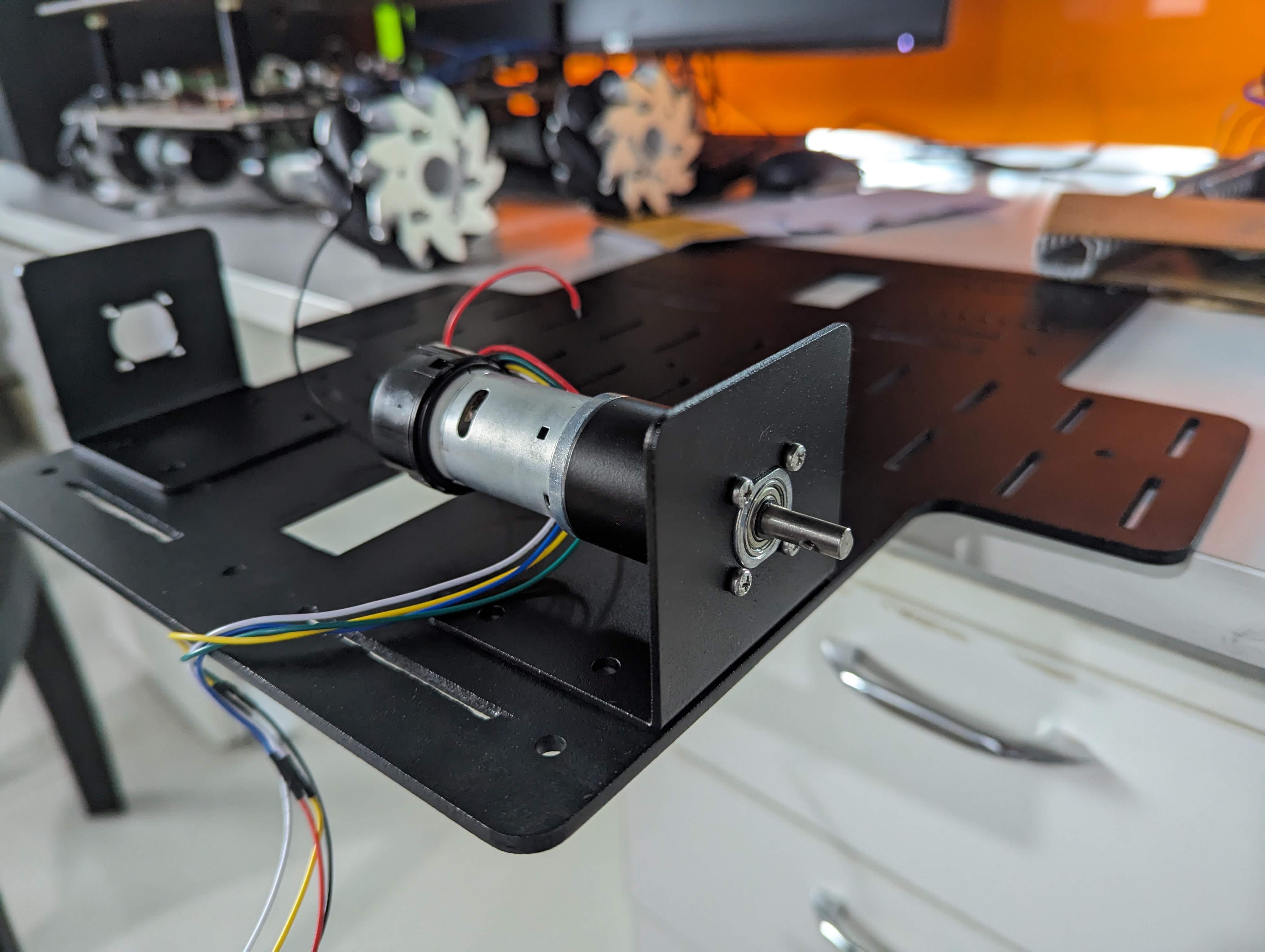

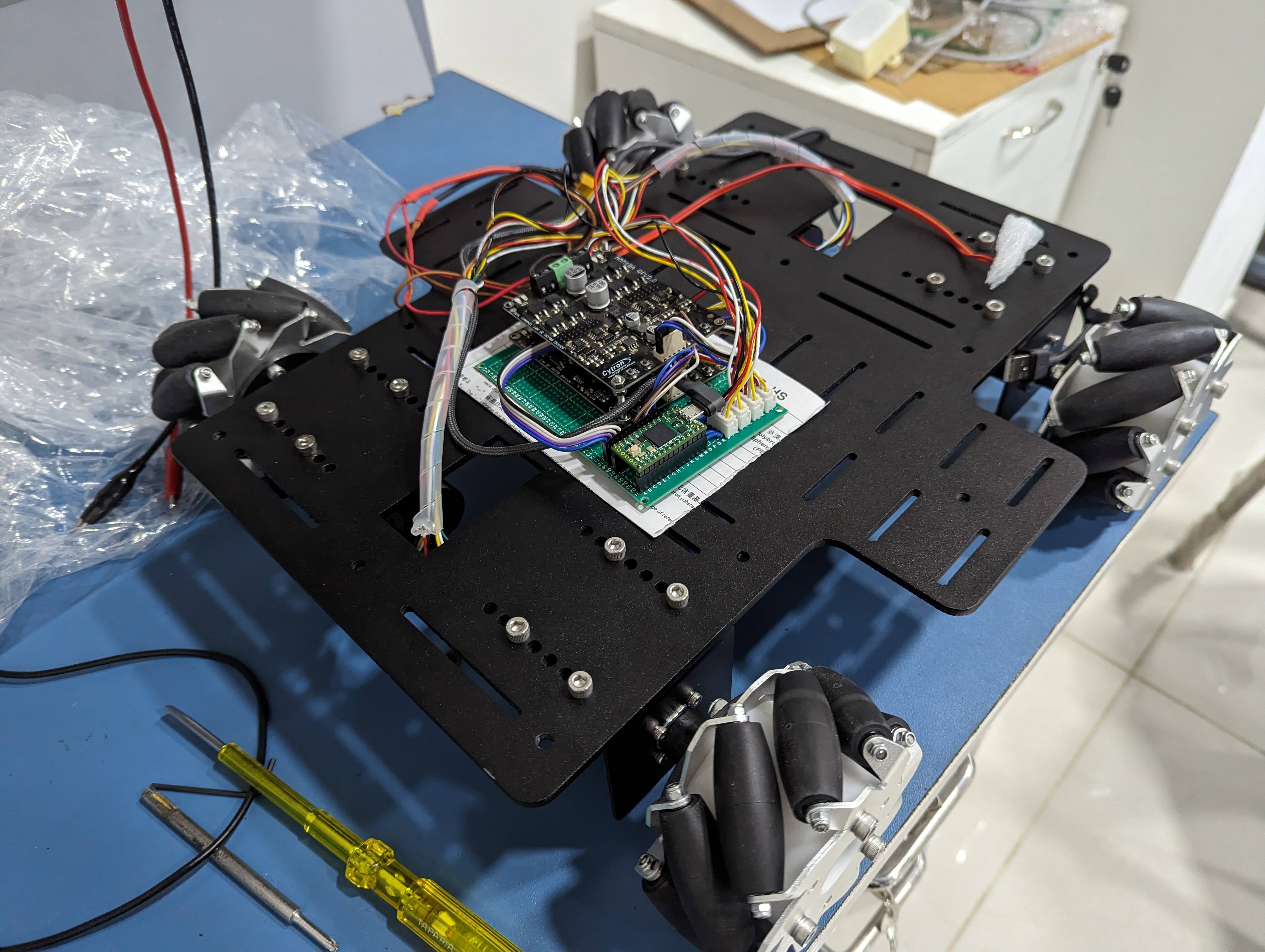

The build began with the fabrication and assembly of laser-cut metal parts. The chassis, powder coated in black, provides a rigid, lightweight frame for the robot. Key hardware components include:

- Chassis Assembly: Precision metal parts were laser cut and assembled to form the robot’s frame. The body was powder coated in black to enhance corrosion resistance and aesthetics.

- Drive System: The robot is powered by 60 rpm Rhino motors that deliver 40 kg-cm of torque and are fitted with high-resolution encoders for precise feedback.

- Electronic Control: A perfboard-based PCB integrates a Teensy microcontroller with headers for motor encoder inputs. A Raspberry Pi 4, running Raspberry Pi OS with ROS, serves as the central “brain” of the system.

- Sensing: An RPlidar A1M8 provides 360° lidar scanning for mapping and obstacle detection.

Software and Firmware Implementation

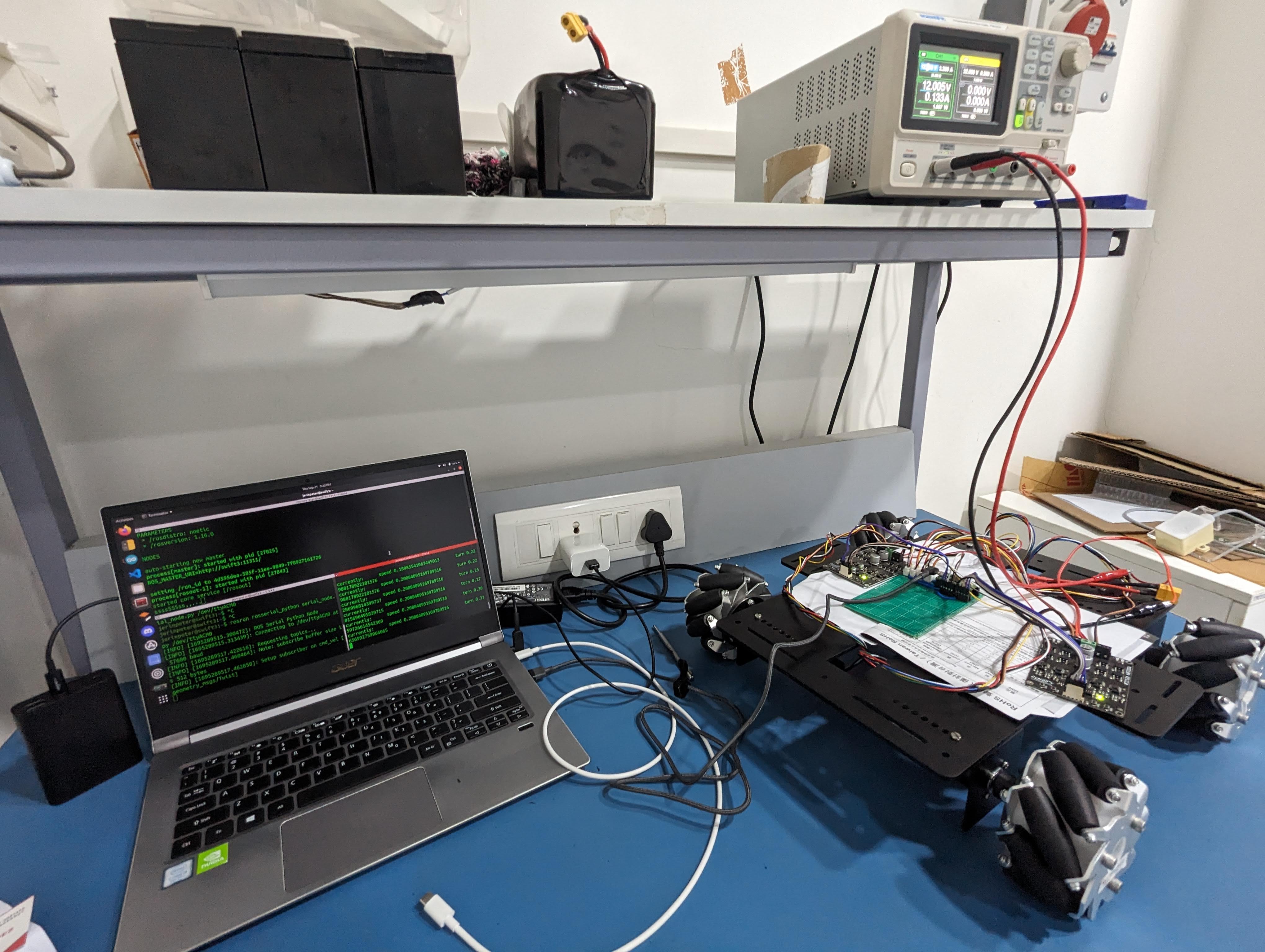

The base firmware, developed in C/C++, subscribes to the cmd_vel topic in ROS and decomposes velocity commands into PWM signals.
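The step from a velocity command to individual wheel targets can be illustrated with the standard inverse kinematics for a four-wheel mecanum base. This is a minimal Python sketch rather than the project's C/C++ firmware. The wheel radius and chassis dimensions are hypothetical placeholders, since the report does not state them; only the 60 rpm speed limit comes from the motor rating above.

```python
import math

# Hypothetical geometry: the report does not give these dimensions.
WHEEL_RADIUS = 0.05   # wheel radius in metres (assumed)
HALF_LENGTH = 0.15    # centre to wheel axle along x, in metres (assumed)
HALF_WIDTH = 0.15     # centre to wheel along y, in metres (assumed)

# 60 rpm motor rating converted to rad/s.
MAX_WHEEL_SPEED = 60.0 * 2.0 * math.pi / 60.0


def twist_to_wheel_speeds(vx, vy, wz):
    """Map a body twist (x forward, y left, wz counter-clockwise)
    to wheel angular speeds in rad/s.

    Returns (front_left, front_right, rear_left, rear_right).
    """
    k = HALF_LENGTH + HALF_WIDTH
    front_left = (vx - vy - k * wz) / WHEEL_RADIUS
    front_right = (vx + vy + k * wz) / WHEEL_RADIUS
    rear_left = (vx + vy - k * wz) / WHEEL_RADIUS
    rear_right = (vx - vy + k * wz) / WHEEL_RADIUS
    return (front_left, front_right, rear_left, rear_right)


def scale_to_limit(speeds, limit=MAX_WHEEL_SPEED):
    """Scale all wheel speeds by one factor so none exceeds the limit.

    Uniform scaling keeps the direction of motion unchanged.
    """
    peak = max(abs(s) for s in speeds)
    if peak <= limit:
        return tuple(speeds)
    factor = limit / peak
    return tuple(s * factor for s in speeds)
```

For example, `twist_to_wheel_speeds(0.0, 0.1, 0.0)` yields opposite signs on the diagonals, which is the wheel pattern that produces sideways motion. The resulting speeds would then serve as setpoints for the per-motor speed controllers.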

A PID controller regulates motor speed to ensure precise actuation. Simultaneously, encoder feedback is processed to compute the robot's real-time velocity (linear along X and Y, angular about Z), which is published back to the Raspberry Pi. A Python node aggregates these signals to publish refined odometry data.
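The path from encoder counts to odometry can be sketched in three steps: convert tick deltas to wheel speeds, apply the mecanum forward kinematics to get the body velocity, and integrate that velocity into a pose. The sketch below assumes the standard four-wheel mecanum model. `TICKS_PER_REV` and the geometry constants are hypothetical values, not taken from the project.

```python
import math

# Hypothetical constants: none of these values appear in the report.
WHEEL_RADIUS = 0.05      # metres (assumed)
HALF_LENGTH = 0.15       # metres (assumed)
HALF_WIDTH = 0.15        # metres (assumed)
TICKS_PER_REV = 1000     # encoder counts per wheel revolution (assumed)


def ticks_to_wheel_speed(delta_ticks, dt):
    """Convert an encoder tick delta over dt seconds to rad/s."""
    return 2.0 * math.pi * delta_ticks / (TICKS_PER_REV * dt)


def wheel_speeds_to_twist(fl, fr, rl, rr):
    """Forward kinematics: wheel speeds (rad/s) to body velocity.

    Returns (vx, vy, wz) with x forward, y left, wz counter-clockwise.
    """
    k = HALF_LENGTH + HALF_WIDTH
    vx = WHEEL_RADIUS * (fl + fr + rl + rr) / 4.0
    vy = WHEEL_RADIUS * (-fl + fr + rl - rr) / 4.0
    wz = WHEEL_RADIUS * (-fl + fr - rl + rr) / (4.0 * k)
    return (vx, vy, wz)


def integrate_pose(pose, twist, dt):
    """Advance a planar pose (x, y, theta) by one Euler step.

    The body-frame velocity is rotated into the world frame first,
    because a holonomic base can move sideways relative to its heading.
    """
    x, y, theta = pose
    vx, vy, wz = twist
    x += (vx * math.cos(theta) - vy * math.sin(theta)) * dt
    y += (vx * math.sin(theta) + vy * math.cos(theta)) * dt
    theta += wz * dt
    return (x, y, theta)
```

Calling `integrate_pose` once per encoder update accumulates a dead-reckoned pose. Because the integration is open loop, any wheel slip accumulates as drift, which is why a second source such as the lidar is useful.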

Sensor fusion combines lidar-derived odometry with wheel encoder odometry to improve localization accuracy, since the lidar estimate can correct drift that builds up when the mecanum wheels slip.
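The report does not say which fusion algorithm is used, so the following is only an illustration of the general idea and not the project's method. It fuses two pose estimates by inverse-variance weighting, where the estimate with the smaller variance receives the larger weight. The variances passed in are hypothetical tuning values. In ROS, this kind of fusion is often done with an extended Kalman filter, for example the one in the `robot_localization` package.

```python
import math


def fuse_scalar(a, var_a, b, var_b):
    """Inverse-variance weighted average of two scalar estimates.

    Returns (fused_value, fused_variance).
    """
    weight_a = var_b / (var_a + var_b)
    fused = weight_a * a + (1.0 - weight_a) * b
    fused_var = var_a * var_b / (var_a + var_b)
    return fused, fused_var


def wrap_angle(angle):
    """Wrap an angle to the interval [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def fuse_pose(wheel_pose, wheel_var, lidar_pose, lidar_var):
    """Fuse two (x, y, theta) estimates component by component.

    wheel_var and lidar_var are per-component variances (assumed known).
    Heading is fused through the wrapped difference so that estimates
    on either side of +/-pi are not averaged to zero.
    """
    x, _ = fuse_scalar(wheel_pose[0], wheel_var[0], lidar_pose[0], lidar_var[0])
    y, _ = fuse_scalar(wheel_pose[1], wheel_var[1], lidar_pose[1], lidar_var[1])
    weight_lidar = wheel_var[2] / (wheel_var[2] + lidar_var[2])
    delta = wrap_angle(lidar_pose[2] - wheel_pose[2])
    theta = wrap_angle(wheel_pose[2] + weight_lidar * delta)
    return (x, y, theta)
```

With equal variances the result is the midpoint of the two estimates. As the wheel variance grows, for instance during aggressive strafing where slip is likely, the fused pose moves toward the lidar estimate.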

The system employs GMapping for generating occupancy grid maps and a Timed Elastic Band (TEB) planner for executing holonomic navigation.

Experimental Validation

The robot was tested to validate its performance. Multiple Python scripts were developed to send velocity commands that drive the robot along predefined trajectories. With fused odometry as feedback, the robot executed complex maneuvers with precision. The experiments confirmed the robustness of the control system and the effectiveness of the PID loops in maintaining the desired motor speeds.
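The behaviour of a speed-regulating PID loop of the kind described above can be checked in simulation before running it on hardware. The sketch below is a generic discrete PID with output clamping and a simple anti-windup rule, driven against a first-order motor model. The gains, the motor model, and its parameters are all assumptions made for illustration. They are not the project's tuned values or its firmware code.

```python
class PID:
    """Discrete PID controller with a clamped output."""

    def __init__(self, kp, ki, kd, out_min, out_max):
        self.kp = kp
        self.ki = ki
        self.kd = kd
        self.out_min = out_min
        self.out_max = out_max
        self.integral = 0.0
        self.prev_error = None

    def update(self, setpoint, measured, dt):
        """Return the control output for one time step of dt seconds."""
        error = setpoint - measured
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error

        candidate = self.integral + error * dt
        output = self.kp * error + self.ki * candidate + self.kd * derivative
        clamped = min(max(output, self.out_min), self.out_max)
        # Anti-windup: keep the new integral only when not saturated.
        if clamped == output:
            self.integral = candidate
        return clamped


def simulate_motor(pid, target, steps=500, dt=0.01, gain=6.28, tau=0.1):
    """Run the PID against a hypothetical first-order motor model.

    The model assumes speed settles at gain * duty with time constant
    tau. Returns the speed (rad/s) after the last step.
    """
    speed = 0.0
    for _ in range(steps):
        duty = pid.update(target, speed, dt)   # duty cycle in [-1, 1]
        speed += (gain * duty - speed) * dt / tau
    return speed
```

As a usage example, `simulate_motor(PID(0.5, 2.0, 0.0, -1.0, 1.0), target=3.0)` runs five simulated seconds and returns a speed close to the 3.0 rad/s target. The duty cycle would be scaled to the PWM resolution of the microcontroller in a real implementation.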

Conclusion

The Mecanum Wheel Based Autonomous Navigation Mobile Robot project demonstrates a successful integration of advanced mechanical design, precision control, and sensor fusion techniques. The robust drive system, combined with effective firmware and ROS-based software architecture, enables reliable omnidirectional navigation in complex environments. Future work will focus on further refining the localization algorithms, integrating additional sensors for improved environmental perception, and scaling the system for broader applications.

Future Scope

- Enhanced sensor fusion using additional vision-based systems.

- Integration with advanced ROS navigation stacks for dynamic re-planning.

- Optimization of PID parameters for greater precision on varying terrain.

- Development of an intuitive user interface for real-time teleoperation and monitoring.

For More Photos and Videos Visit

Google Photos