Self-Driving Car Project

Advanced Autonomous Driving Using Open-Source Frameworks

This project implements a self-driving car system using open-source software and hardware. It integrates perception, decision-making, and control modules to achieve autonomous navigation in a simulated environment.

Abstract

This project implements a self-driving car system that combines computer vision, sensor data processing, and control algorithms on ROS (Robot Operating System). Its modular architecture detects road features, makes real-time decisions, and navigates safely through simulated environments. The system's performance has been validated through extensive testing in simulation under various conditions.

Introduction

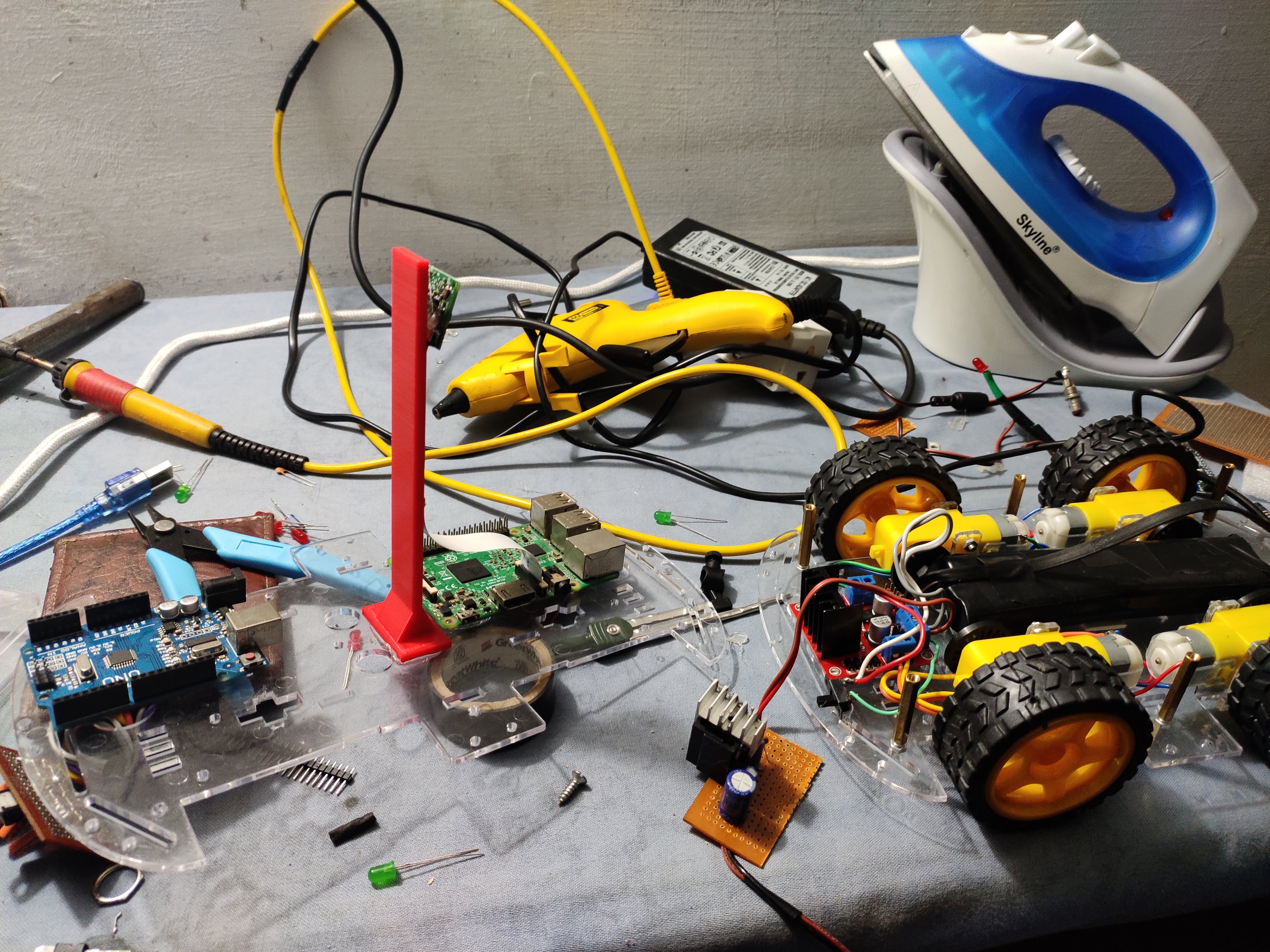

Autonomous driving has become a cornerstone of modern robotics and artificial intelligence research. This project, built on the `selfdrivingcar-master` codebase, demonstrates how open-source frameworks and standard hardware components can be combined into an effective self-driving system. Through iterative testing and refinement, the system has been tuned for robust perception, decision-making, and control.

Methodology

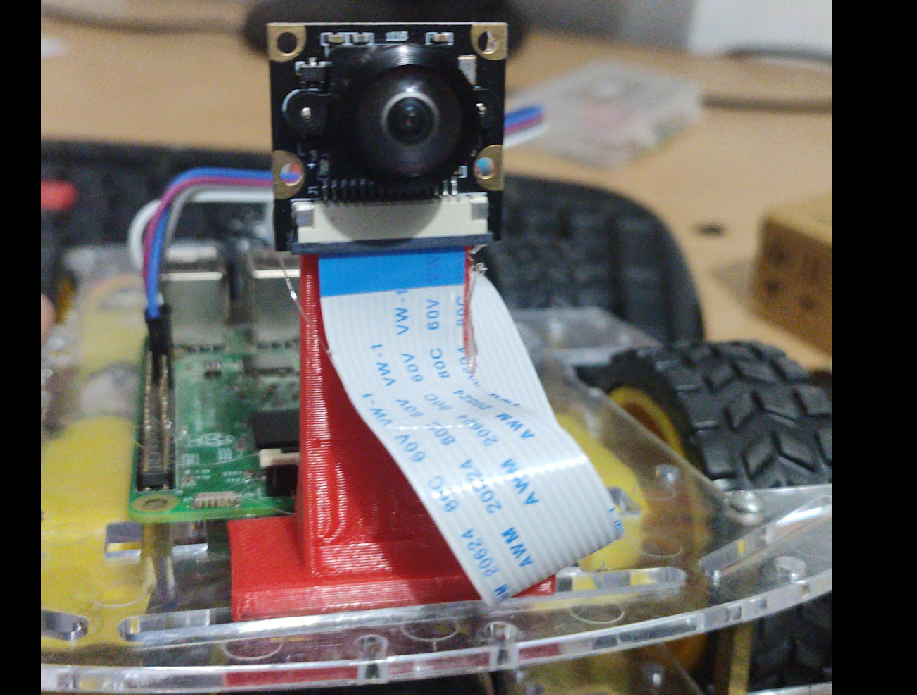

The project is organized into several key modules:

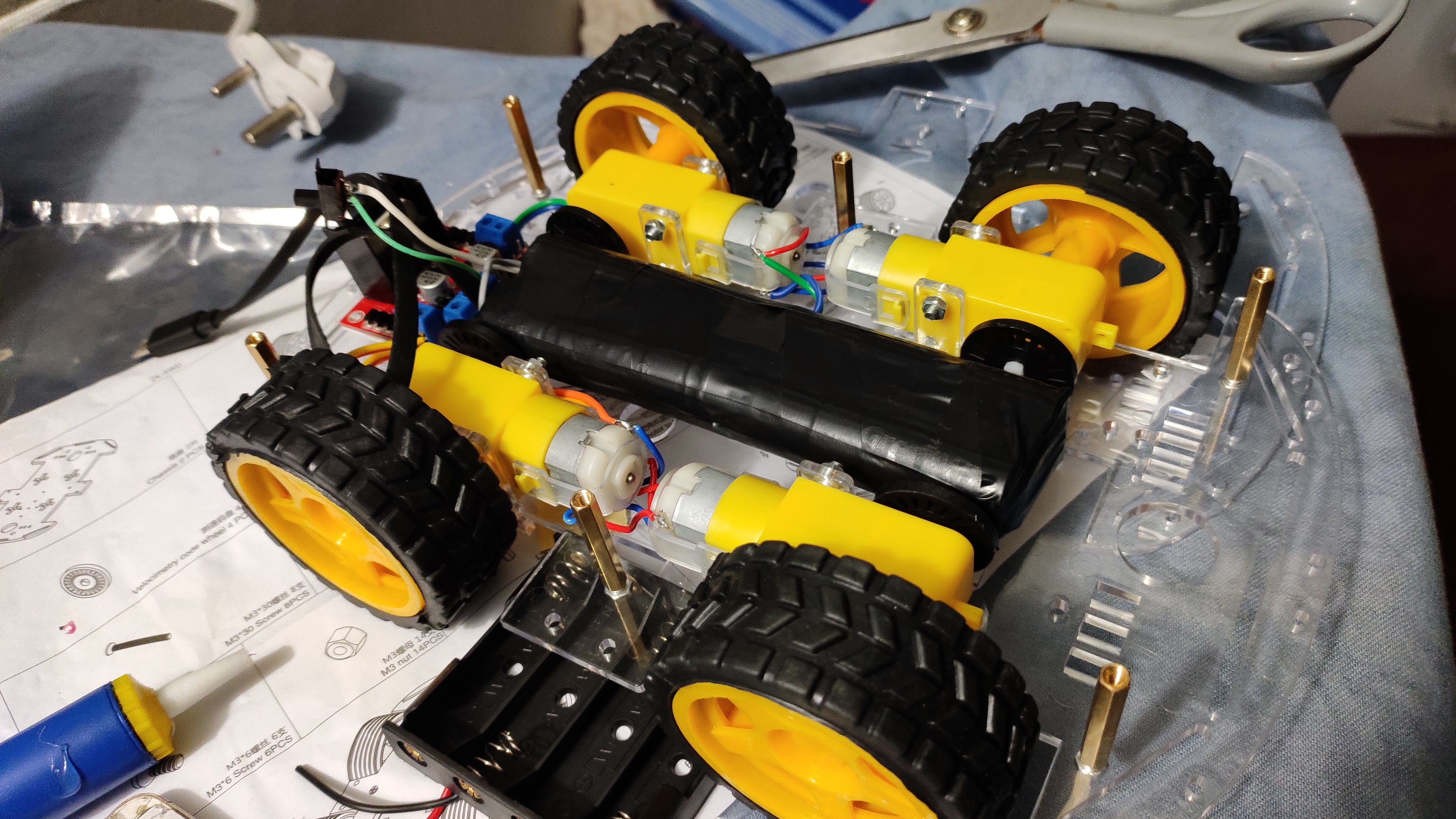

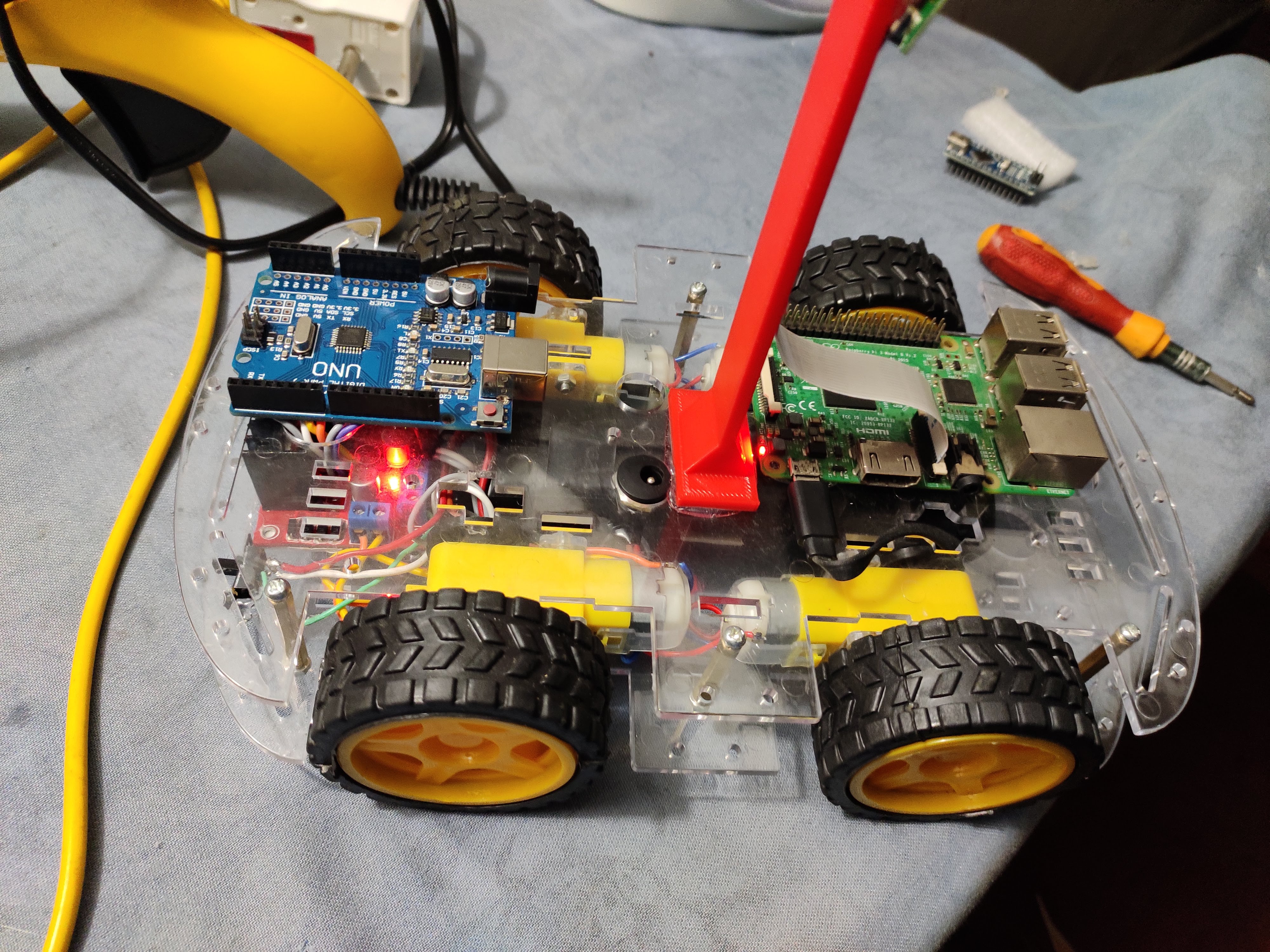

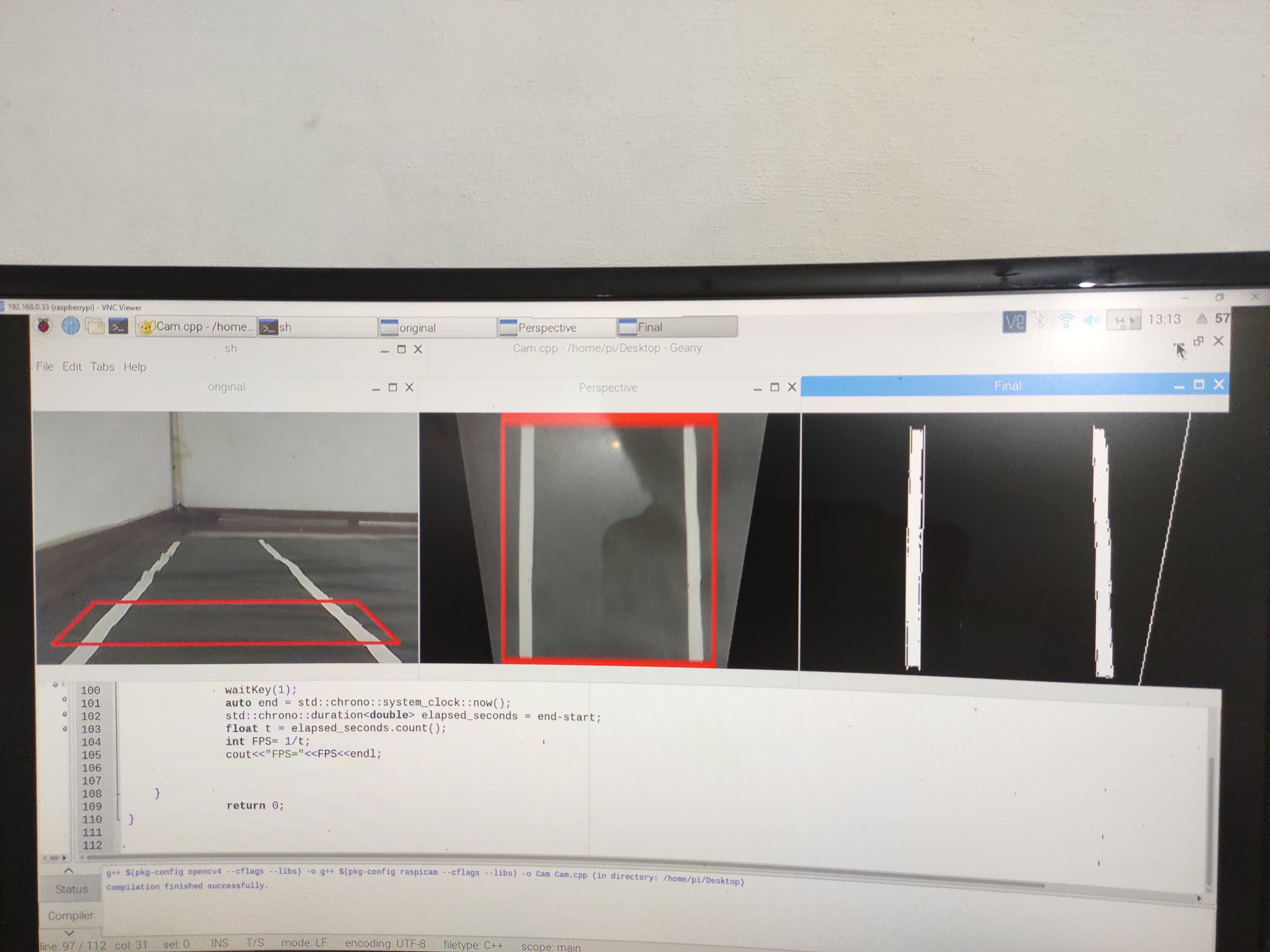

- Perception: Uses camera feeds and sensor data to detect road lanes, obstacles, and traffic signals.

- Decision-Making: Implements path planning algorithms that generate safe and efficient trajectories.

- Control: Uses proportional-integral-derivative (PID) controllers to convert high-level commands into actuator signals for steering and throttle.

- Software Integration: The system is built on ROS, enabling modular communication between perception, planning, and control nodes.
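The Control module is described as using PID controllers, but no gains, sample period, or error signal are given. The following is a minimal discrete PID sketch under stated assumptions: the error is the vehicle's lateral offset from the lane center, the output is a normalized steering command clamped to [-1, 1], and both the gains and the toy vehicle model in the hypothetical `simulate_lane_keeping` helper are illustrative, not taken from `selfdrivingcar-master`.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class PIDController:
    """Discrete PID: u = kp*e + ki*sum(e*dt) + kd*de/dt, clamped to the output range.

    Gains and limits are illustrative assumptions, not values from the project.
    """
    kp: float
    ki: float
    kd: float
    output_min: float = -1.0  # assumed normalized steering range
    output_max: float = 1.0
    _integral: float = field(default=0.0, init=False)
    _prev_error: Optional[float] = field(default=None, init=False)

    def reset(self) -> None:
        """Clear accumulated state, e.g. when the controller is re-engaged."""
        self._integral = 0.0
        self._prev_error = None

    def update(self, error: float, dt: float) -> float:
        """Return the clamped control output for one time step of length dt (s)."""
        if dt <= 0:
            raise ValueError("dt must be positive")
        self._integral += error * dt
        # No derivative on the first sample, so there is no spike from a missing history.
        derivative = 0.0 if self._prev_error is None else (error - self._prev_error) / dt
        self._prev_error = error
        output = self.kp * error + self.ki * self._integral + self.kd * derivative
        return max(self.output_min, min(self.output_max, output))


def simulate_lane_keeping(pid: PIDController, initial_offset: float,
                          dt: float, steps: int) -> float:
    """Hypothetical toy model: the lateral offset (m) changes at a rate equal to
    the steering command; the controller drives the offset toward 0 (lane center)."""
    offset = initial_offset
    for _ in range(steps):
        steering = pid.update(0.0 - offset, dt)  # error = target - measurement
        offset += steering * dt
    return offset


if __name__ == "__main__":
    controller = PIDController(kp=2.0, ki=0.0, kd=0.1)
    print(simulate_lane_keeping(controller, initial_offset=1.0, dt=0.05, steps=200))
```

In a ROS deployment, `update` would typically be called from a subscriber callback or timer with `dt` derived from message timestamps. A production controller would usually also add integral anti-windup, which this sketch omits for brevity.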

System Architecture

The self-driving car uses a modular design in which individual ROS nodes exchange messages over topics to process sensor inputs, detect objects, plan trajectories, and execute control commands. Because each function runs in its own node, modules can be developed, tested, and replaced independently, which supports scalability and real-time decision-making in complex scenarios.
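To illustrate the node-and-topic decoupling without requiring a ROS installation, the sketch below wires hypothetical perception, planning, and control nodes through a small in-process `MessageBus`. The bus, the topic names (`/perception/lane_offset`, `/planning/target`, `/control/cmd`), the speed-reduction rule, the sign convention, and the gains are all assumptions for illustration. This is not the rospy/rclpy API, and real ROS delivers messages asynchronously, often between separate processes.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List


class MessageBus:
    """Hypothetical in-process stand-in for ROS topics (not rospy/rclpy):
    nodes publish to named topics and every subscribed callback is invoked."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Callable[[Any], None]]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callable[[Any], None]) -> None:
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, message: Any) -> None:
        # Synchronous delivery keeps the sketch simple; ROS uses queued, async delivery.
        for callback in self._subscribers[topic]:
            callback(message)


def clamp(value: float, low: float, high: float) -> float:
    return max(low, min(high, value))


class PerceptionNode:
    """Publishes the vehicle's lateral offset from the lane center in meters
    (assumed convention: positive = vehicle is right of center)."""

    def __init__(self, bus: MessageBus) -> None:
        self.bus = bus

    def process(self, lane_offset_m: float) -> None:
        # A real node would estimate this from camera and sensor data.
        self.bus.publish("/perception/lane_offset", lane_offset_m)


class PlanningNode:
    """Turns perception output into a target lateral error and target speed."""

    def __init__(self, bus: MessageBus, cruise_speed: float = 10.0) -> None:
        self.bus = bus
        self.cruise_speed = cruise_speed
        bus.subscribe("/perception/lane_offset", self.on_lane_offset)

    def on_lane_offset(self, offset_m: float) -> None:
        # Illustrative rule: halve the target speed when far from the lane center.
        speed = self.cruise_speed if abs(offset_m) < 0.5 else self.cruise_speed / 2
        self.bus.publish("/planning/target",
                         {"lateral_error": -offset_m, "target_speed": speed})


class ControlNode:
    """Maps targets to normalized steering in [-1, 1] and throttle in [0, 1]."""

    def __init__(self, bus: MessageBus, k_steer: float = 0.8, k_speed: float = 0.1) -> None:
        self.bus = bus
        self.k_steer = k_steer
        self.k_speed = k_speed
        self.current_speed = 0.0  # would come from odometry in a real system
        bus.subscribe("/planning/target", self.on_target)

    def on_target(self, target: Dict[str, float]) -> None:
        # Simple proportional laws stand in for the project's controllers.
        steering = clamp(self.k_steer * target["lateral_error"], -1.0, 1.0)
        throttle = clamp(self.k_speed * (target["target_speed"] - self.current_speed), 0.0, 1.0)
        self.bus.publish("/control/cmd", {"steering": steering, "throttle": throttle})


if __name__ == "__main__":
    bus = MessageBus()
    perception = PerceptionNode(bus)
    PlanningNode(bus)
    ControlNode(bus)
    commands: List[Dict[str, float]] = []
    bus.subscribe("/control/cmd", commands.append)  # stands in for the actuator driver
    perception.process(0.25)
    print(commands[-1])
```

Because each node knows only topic names, one stage (for example, the planning rule) can be replaced without touching the others, which is the property a modular ROS design relies on.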

Experimental Results

The self-driving car was tested extensively in simulation, where it successfully navigated complex routes, detected obstacles, and adhered to traffic rules. The performance metrics indicate a high level of robustness and efficiency in autonomous decision-making.

Conclusion and Future Work

This project demonstrates that an autonomous driving system can be developed using open-source tools and modular hardware and validated in simulation. Future work will focus on refining the perception algorithms, incorporating additional sensors for better environmental understanding, and testing the system under more diverse and challenging conditions, including real-world environments.